UPDATE: I have open-sourced a Java implementation of the below principles called “RedisQ“. Enjoy!

Redis is a high performance key-value datastore that differs from other key-value solutions in the way it handles values. Instead of just storing values as simple strings, it recognizes multiple specific data types such as Lists, Sets, Hashes (maps), Strings or Numbers. Each data type has its own set of features to manipulate the data it contains in an atomic manner, making it an ideal tool for highly distributed system where concurrency is a potential issue.

Combining those features in creative ways allows for novel ways of doing “traditional” things differently. One particular combination has recently allowed my team and I to implement a moderately (read: good enough) reliable message delivery mechanism for multiple consumers consuming messages at their own pace.

The solution has advantages and some caveats, but if your problem allows you to live with the possible drawbacks, it’s a nice lightweight solution to a problem that is usually answered using some more traditional (and more complex) tools, like *MQs.

In a recent project, we ended up choosing Redis mostly because:

- It was already part of our architecture and we had a simple inter-component messaging use case but didn’t want to introduce a new component in our architecture just for this.

- Expected volume was low, which meant that our data set could fit in memory. Note: although Redis requires everything you store in it to fit in memory, it supports persistence to disk.

- Redis allowed for all of the implementation characteristics we were looking for, namely:

- Concurrency: Because all operations in Redis are atomic, supporting concurrency without too much of a hassle is straightforward.

- Persistence: Configured properly, we can ensure persistence of our queues to disk using one of the supported Redis persistence strategies.

- Lightweight: Using Redis from any language/platform is extremely simple and provisioning it / maintaining it on a production server is dead easy.

In this post, I will go over the strategy we used with regards to Redis data structures and operations for handling the message publishing and consuming.

The high-level strategy consists of the following:

- When each consumer starts up and gets ready to consume messages, it registers by adding itself to a Set representing all consumers registered on a queue.

- When a producers publishes a message on a queue, it:

- Saves the content of the message in a Redis key

- Iterates over the set of consumers registered on the queue, and pushes the message ID in a List for each of the registered consumers

- Each consumer continuously looks out for a new entry in its consumer-specific list and when one comes in, removes the entry, handles the message and passes on to the next message.

Why not use Redis Pub/Sub?

I already see you coming and asking why not using the Pub/Sub semantics supported out-of-the-box by Redis? The reason was two fold:

- What Redis offers with Pub/Sub is a listener model, where each subscriber receives each messages when it is listening, but won’t receive them when not connected.

- In a clustered environment where you have multiple instances of your consumer component running at the same time, each instance would receive each message produced on the channel. We wanted to make sure any given message got consumed once per logical consumer, even when multiple instances of this component are running.

Hence the name of this post “Reliable Delivery”, because we wanted to make sure every logical consumer eventually receives all messages produced on a queue once and only once, even when not connected – due to, for example, a deployment, a restart or a application failure/crash.

Detailed look at the strategy

Here’s a closer look at the different scenarios using a fictive example of an ordering system with multiple consumers interested in messages when new orders are created:

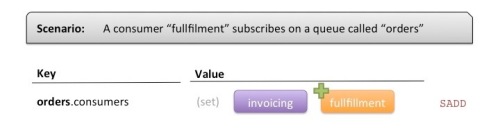

Registering a consumer

A “consumer” represents a logical entity of your architecture. You assign each concumer an identifier which it will use to register itself as a consumer on the queue.

Registering a consumer is only a matter of adding a Set entry to a key that is crafted with the name of the queue in it.

The semantics of a Set are helpful here: each consumer can just “add” an entry to the Set upon start up in a single operation, without the need to worry about any existing value.

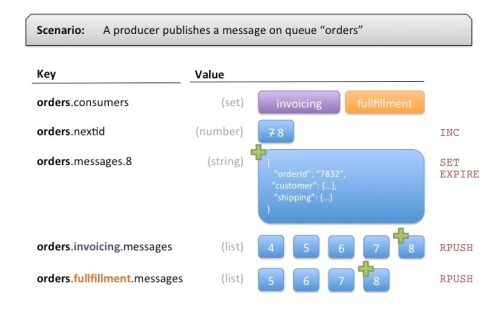

Publishing a message

On the Producer side, a few things need to happen when we’re publishing a message to a specific queue:

- The Producer increments a counter to get the next message ID using the INC command on key “orders.nextid”

- It then stores the message in a key containing the new message ID (“orders.messages.8” in our case). The actual format you store messages can be anything. We used a hash with some metadata information about each message, along with the actual payload. The payload can be serialized in JSON, XML or any format makes sense for your usage.

- Then for each consumer registered in key “orders.consumers”, it pushes the message ID using the RPUSH command on lists for each consumers.

To prevent duplication of message content in Redis, we store the content once and then only add references to the messages in consumer-specific lists. When a consumer consumes messages (more on that later), it will remove the ID from its list (its queue), then read the actual message content in a separate operation.

But what happens when all consumers have read the message? If we stopped here, each message would end up being stored in Redis forever. An efficient solution to this problem is to use Redis’ ability to expire (clean up) keys after some time using the EXPIRE command. Using a reasonable amount of time for the expiration makes up for a cheap cleanup process.

A slight variation, at a cost of message content duplication, would be to store the actual message content in each consumer-specific list. For simpler use cases where messages are small enough, this could be a compelling tradeoff.

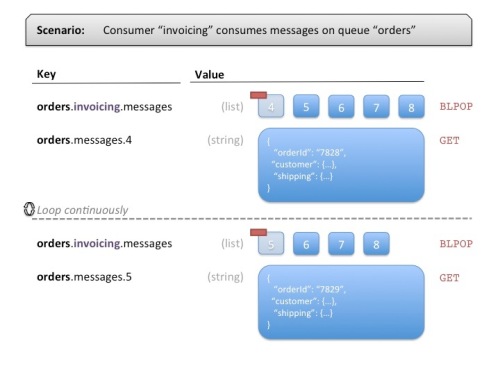

Consuming messages

Each consumer has a specific identifier and uses this identifier to “listen” on Lists stored in specially crafted Redis keys. Redis has this nice feature of “blocking pop”, which allows a client to remove the first or last element of a list, or wait until an element gets added.

Leveraging this feature, each consumer creates a thread that will continuously loop and do the following:

- Use BLPOP (blocking left pop) with a moderately small timeout to continuously “remove an element from the list or wait a bit”.

- When an element gets removed by the pop operation, read the message content and process it.

- When an element does not get removed (no message available), just wait and start over again.

You can have multiple threads or processes consuming messages with the same “consumer identifier” and the solution still works. This allows for both stability and scalability:

- You can spawn multiple consumers consuming messages as the same logical entity, and ensure that if one goes down, the consumption of messages does not stop.

- You can also spawn more consumers when needed for added horsepower.

Caveats

- The solution as described above does not support retryability of messages in case of a failure to process on the consumer side. I could imagine a way to do it using Redis, but one has to wonder if Redis is still the right tool if such a characteristic is required by your use case.

- The solution also does not guarantee that messages will be consumed in the order they were produced. If you have a single consumer instance you’re covered, but as soon as you have multiple consumer instances you cannot guarantee the ordering of messages. Maintaining a lock in a specific key for each consumer would enable this, at the cost of scalability (only 1 message can be consumed at any time throughout your consumer instances).

- If your producers and consumers are using different languages, you must implement this strategy for each platform/language. Fortunately, there are Redis client for pretty much any popular platforms.

Wrap up

There are many ways Redis can be leveraged using the simple data structures and atomic operations it provides. Using a particular combination of those, we’ve been able to implement a simple system that allowed reliable message delivery to multiple consumers in a clustered environment without too much of a hassle.

Because Redis was already part of our architecture, it proved to be a natural choice. The efforts required to build up this solution outweighed the efforts required to provision and maintain an additional component to manage our queues.

It might not be the most appropriate choice for your case. Be thoughtful in your architectural choices!

UPDATE: I have open-sourced a Java implementation of the above principles called “RedisQ“. Enjoy!

Yes I like this approach, and the connection dependent nature of Redis pub/sub is a clear drawback for the reasons you cited. So you’ve implemented this in a production setting? Can you offer any additional feedback on how it’s worked out over time. My one reservation is the long polling nature of such an approach especially if the message rate is high.

Hi Jeff! Yes, this was brought up to production and has been running for a few months now. Very stable, no issue whatsoever. As I mentioned in the article, it was for a low traffic use case that we implemented this (instead of using a full blown xMQ server). The polling nature of this solution seems to have minimal impact on performance: it’s pretty much like a heartbeat/keep alive. When there are messages, the “consumers” will consume them, when there is no message, they will poll at regular intervals (one second in our case) to see if new messages are in their queue. That 1 second interval is not producing any significant overhead on both the application or the network. The Redis protocol probably helps as it is very, very lightweight.

David you said that you interate “over the set of consumers registered on the queue, and pushes the message ID in a List for each of the registered consumers”.

Would you see this as a bottleneck if the number of consumers was large? I was thinking the publisher might keep a local cache of the consumers – but adminately this begs the question of how you would detect new consumers (perhaps poll the cosumer set from time to time).

Could be a bottle neck if the number of consumers is really large, yes.

Fetching a list of short strings (like consumer IDs) in Redis is quick. You could very well use a cache on the producer side, but as you’re suggesting, you would not be able to detect new consumers as soon as they are online and ready. What you could imagine doing is to use the pub-sub system in Redis to notify producers every time a consumers comes up so that the producer(s) refresh their caches.

As with anything else, make sure Redis is really the tool you need for your case. Modern MQs do a wonderful job at handling high volume, persistence and integrity. ZeroMQ and RabbitMQ – both implement the popular AMQP protocol, but ZeroMQ has no persistence built-in – could also be viable low-cost options.

Pingback: Building a Message Queue in Redis | Eric Perry

Hi David! Have you possibly published this as open source library?

Nope sorry. It eventually could, but I need to sort out a few details first.

Pingback: Reliable Queueing in Redis (Part 1) | Engineering @ Bronto

Reading this 2 years after it was originally published. How has this solution scaled for you in production over the last two years? Did you eventually end up replacing it with an xMQ solution? If you did, would you suggest building out this lightweight Redis queue, in hindsight?

(Evaluating options for my product with similar constraints).

This Redis-based queueing solution is still in place and kicking in production as I write these words. It’s been very stable and we haven’t had a single issue with the principle. We’re also using RabbitMQ with great success, so I don’t think it’s a question of one-or-the-other.

The general guideline we’ve come up with after a few years of experience is this: we use Redis-based queues for supporting processing internal to the application boundaries (to support processing of tasks across multiple application instances in a cluster, for example), whereas we use xMQ to cross application boundaries.

In a multi-application architecture (for example, SOA or microservices), seeing Redis as being part of each application allows for better scaling strategies. You can start small by sharing a single Redis instance across all your services. If an application starts consuming too much of that Redis instance’s resources, you can spawn another Redis that you’ll dedicate to that specific application without affecting the other services.

You can of course adopt a similar strategy with xMQ solutions, but scaling an xMQ solution involves more effort and resources than scaling Redis, I think.

Pingback: Reliable Queueing in Redis (Part 1) | Engineering

Reblogged this on Xinyustudio.

Hi, thank you for the article!

Which program you draw these pictures?

@roman, I used Powerpoint to draw these.

Hi David, thanks for sharing this.

I plan to use this architecture in our application but we need retryability support in case of a consumer failure.

As you said, it’s possible to implement it using Redis but I’m also wondering if it’s the right choice.

Would you go for RabbitMQ or another solution in this case?

I’m just afraid of having too much working setting up and maintaining RabbitMQ instead of a simple Redis with AWS Elastic Cache. I could also use AWS SQS but we will need to create too many queues. I prefer the way you can dynamically do this using Redis as you showed.

Thanks!

Hey Tulio! I have implemented retry support with the Redis queuing mechanism described above and it ended up being quite simple, but you need to be careful as to make sure your retry strategy is solid (queues are often critical components of applications, and I’m guessing if you need retries you’re probably having such critical requirements). The retry mechanism I have implemented using Redis has been working reliably over time.

In my experience setting up a RabbitMQ cluster is quite simple to set up. I would not run RabbitMQ as a single instance for production unless queues aren’t critical, but I would definitely recommend RabbitMQ as a cluster. That comes with higher requirements for your production servers, something to balance in your reflection.

No clear answer on that one as I think the final choice is yours. You’ll have to balance the efforts it will take to build and maintain the functionality using a Redis client vs installing and maintaining a RabbitMQ cluster over time. Both options are viable. If you’re using Ruby or Python, there are libraries that are readily available to do queuing with Redis: https://github.com/resque/resque (Ruby) and http://www.celeryproject.org/ (Python)

Hey David. Nice read 🙂 I’ve got two comments/questions:

> when there is no message, they will poll at regular intervals (one second in our case)

What is the advantage of polling every second instead of simply relying on BLPOP’s blocking behavior (having it wait for new data)?

> The solution as described above does not support retryability of messages in case of a failure to process on the consumer side.

Wouldn’t BRPOPLPUSH help in this case? (see section “Pattern: Reliable queue” in http://redis.io/commands/rpoplpush )

Thanks

@Marc: Regarding the polling, you should never have a thread in your application blocking indefinitely as it will not allow the process to be gracefully shutdown without a hard kill. BLPOP, if given no timeout (a value of 0), will block indefinitely. By specifying a timeout, you can wrap the BLPOP calls in a loop and allow for the code to check if it should end the loop when the thread has been requested to stop.

Regarding BRPOPLPUSH, it might help on retryability! The article was to describe how I used Redis to implement a simple queuing mechanism that did not require more advanced MQ systems. If your requirements include retryability, I would advise looking for other, more robust tools that guarantee that kind of thing. 🙂

I have used BLPOP with a timeout, works great! Subsequently, I have discovered there are some higher-level wrapper apis that abstract away the drudgery of popping off messages into a handler. Notably in my case was the Springframework’s Spring Integration RedisQueueInboundChannelAdapter. http://docs.spring.io/spring-integration/reference/html/redis.html#redis-queue-inbound-channel-adapter